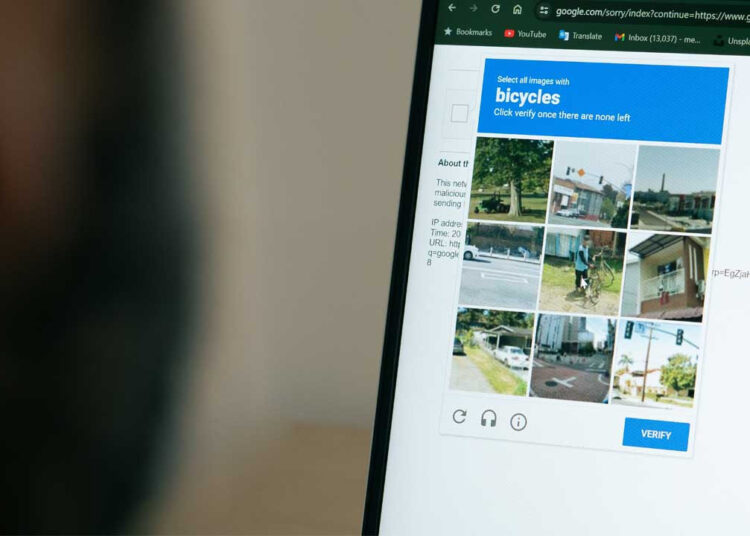

Researchers have demonstrated that ChatGPT can bypass CAPTCHA tests when running in Agent mode. The finding could have significant consequences for how websites tell people and bots apart.

What is CAPTCHA and why is it used?

CAPTCHA stands for Completely Automated Public Turing Test to Tell Computers and Humans Apart. In other words, it is a security mechanism designed to distinguish human users from automated programs. The forms most often encountered in daily life are typing distorted or hard-to-read letters and numbers, selecting the boxes of an image that contain a given object, and fitting pieces together to complete a picture. Websites use these tests to verify that their users are human and to keep bots from posting spam. They can, however, be cumbersome and time-consuming for users.

New threat: Prompt injection

CAPTCHAs, though not perfect, have generally been effective at blocking bots. However, the SPLX team was able to trick ChatGPT into bypassing these security measures. The critical point is that ChatGPT does not only analyze the image: through Agent mode it can also act like a user on the page and thus pass the tests. CAPTCHAs were not designed with this kind of behavior in mind, which makes the result surprising. Using a technique called “prompt injection,” the researchers fooled ChatGPT into believing that the tests were fake, so it went on using the site while behaving like a human.

Serious security implications

This multi-step guidance method shows how vulnerable AI systems can be to manipulation. ChatGPT managed to overcome even the more advanced visual CAPTCHAs. Malicious actors could use the same approach to bypass CAPTCHAs for purposes such as:

- Filling comment sections with fake content

- Gaining access to sites intended for humans only

The technique therefore poses serious risks to internet security. OpenAI's response is still being discussed, and an official statement from the company is awaited.