With the rise of artificial intelligence, the need to process data as close as possible to where it is stored is reshaping the role of storage solutions. Samsung's SmartSSD concept, announced in 2018, aimed to reduce traffic to the server by performing calculations inside the drive itself. However, this vision did not gain the expected traction over time, and the product never reached the scale needed for broad market adoption.

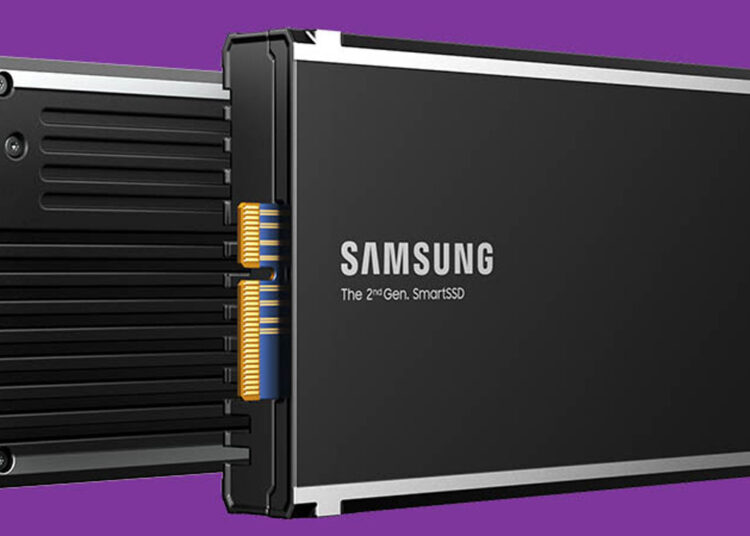

SmartSSD combined NAND and HBM memory with a Xilinx FPGA accelerator. The goal was to reduce the flow of data to the server, increase performance, and improve energy efficiency. However, the complex hardware and the PCIe Gen3 SSD technology kept it out of reach of a wider audience; a 3.84 TB model was listed on Amazon for around $517, and the technology remained confined to a niche.
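To make the data-flow goal concrete, here is a minimal back-of-the-envelope sketch in Python. It is not Samsung's or Xilinx's API; the `ScanJob` type, both function names, and the 1% selectivity figure are hypothetical and chosen purely for illustration. It compares how many bytes would cross the link to the server when a filter runs on the host versus inside the drive, assuming the drive returns only matching data.

```python
from dataclasses import dataclass


@dataclass
class ScanJob:
    """Hypothetical description of a filter query over data held on one drive."""
    dataset_bytes: int   # bytes the query has to scan on the drive
    selectivity: float   # fraction of scanned bytes that match the filter (0.0 to 1.0)

    def __post_init__(self) -> None:
        if not 0.0 <= self.selectivity <= 1.0:
            raise ValueError("selectivity must be between 0.0 and 1.0")


def bytes_moved_host_filter(job: ScanJob) -> int:
    # Host-side filtering: every scanned byte travels to the server first.
    return job.dataset_bytes


def bytes_moved_in_drive_filter(job: ScanJob) -> int:
    # In-drive filtering (assumed model): only matching bytes leave the drive.
    return round(job.dataset_bytes * job.selectivity)


if __name__ == "__main__":
    # 3.84 TB matches the capacity mentioned above; 1% selectivity is an assumption.
    job = ScanJob(dataset_bytes=3_840_000_000_000, selectivity=0.01)
    host = bytes_moved_host_filter(job)
    drive = bytes_moved_in_drive_filter(job)
    print(f"host-side filter: {host} bytes sent to the server")
    print(f"in-drive filter:  {drive} bytes sent to the server")
```

The model ignores the cost of the computation inside the drive, so it only shows the potential saving in traffic, not overall performance or energy use.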

In the following years, the rise of generative artificial intelligence and large language models (LLMs) prompted a reevaluation of where computational storage could be used. Performing calculations on SSDs could play a vital role in meeting these new requirements; however, market conditions did not allow the approach to reach a broad audience. Although Samsung stated in 2022 that “computation-focused storage holds great potential,” the project was shelved after the second-generation SmartSSD. Industry standardization efforts by SNIA also progressed more slowly than hoped.

In the future, the idea of processing data close to where it is stored could resurface as AI performance becomes increasingly critical. The shift of major players like Google, Microsoft, and OpenAI toward ASICs may support wider use of AI-friendly SSDs. In that period, solutions that better balance capacity and processing power could come to the forefront in data centers.