Newly developed large language models (LLMs) need high-quality training data, which is leading companies to crawl the internet to gather it. In the process, the risk of sensitive user information ending up in a model increases. The Google Research team is working on new methods to reduce the risk that LLMs memorize their training data.

LLMs can produce different outputs for the same input, and in some cases they repeat phrases from their training data verbatim. When those phrases contain personal information, the result can be a privacy violation. Copyrighted content appearing in outputs poses an additional challenge for developers. Differential privacy addresses this by adding controlled noise to the training process, which keeps the model from memorizing data directly.
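The idea of adding controlled noise during training can be sketched as follows. This is a minimal illustration in the style of DP-SGD (clip each example's gradient, sum, add Gaussian noise, average), not Google's implementation; the function names, the toy gradients, and the parameter values are all assumptions made for the example.

```python
import math
import random

def clip(grad, max_norm):
    """Scale a gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]

def dp_sgd_step(weights, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One noisy update: clip per-example gradients, sum, add noise, average."""
    dim = len(weights)
    # Clipping bounds how much any single training example can move the model.
    clipped = [clip(g, max_norm) for g in per_example_grads]
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    # Gaussian noise is scaled to the clipping bound (hypothetical settings).
    noise_std = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, noise_std) for s in summed]
    batch_size = len(per_example_grads)
    return [w - lr * n / batch_size for w, n in zip(weights, noisy)]

# Toy data: three per-example gradients for a two-parameter model.
rng = random.Random(0)
weights = [0.0, 0.0]
grads = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]
new_weights = dp_sgd_step(weights, grads, lr=0.1, max_norm=1.0,
                          noise_multiplier=1.1, rng=rng)
print(new_weights)
```

Clipping limits the influence of any one example, and the noise masks whatever influence remains; together they are what makes it hard for a single record to be reproduced verbatim.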

Differential privacy comes at a cost: it can affect a model’s accuracy and its computational requirements. The Google team examined how this approach changes the scaling laws of AI models. The results showed that performance depends on the ratio of noise to data: more noise reduces output quality, but this can be offset with more computing power or additional data.
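One way to see why more data or compute can offset the noise is a small calculation. It assumes DP-SGD-style training in which a fixed amount of noise is added once per batch and the result is averaged over the batch; the article does not spell out the exact recipe, and the function name and numbers here are illustrative only.

```python
def noise_on_average(noise_multiplier, max_norm, batch_size):
    """Std of the per-coordinate noise left after averaging over the batch."""
    return noise_multiplier * max_norm / batch_size

# Same noise settings, increasingly large batches (hypothetical sizes).
for batch_size in (256, 4096, 65536):
    print(batch_size, noise_on_average(1.0, 1.0, batch_size))
```

Under this assumption, the noise that reaches each update shrinks as the batch grows, so a larger batch (which needs more data and compute per step) leaves a cleaner training signal for the same noise setting.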

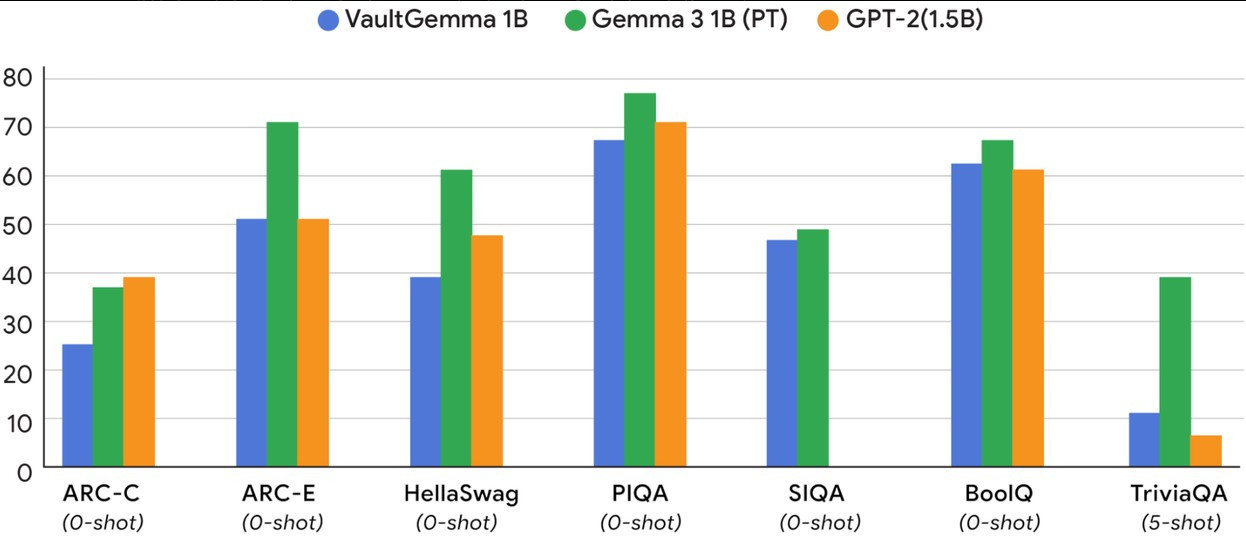

VaultGemma: Google’s new privacy-focused model

As a result of these studies, Google introduced an open-weight model called VaultGemma. VaultGemma uses differential privacy to reduce the risk of memorization and stands out as an experimental step in Google’s privacy-focused AI strategy. The model is based on Google’s Gemma 2 architecture and has approximately 1 billion parameters. While not as powerful as large-scale models, VaultGemma performs close to standard models of the same size and significantly better than non-specialized models.

Credit: Google

More effective for smaller models

The study emphasizes that differential privacy is especially well suited to smaller LLMs designed for specific tasks, rather than large, general-purpose models. This could allow companies to develop privacy-focused solutions for targeted AI features.

Available for use

VaultGemma is currently available for download from Hugging Face and Kaggle. Although the weights are openly shared, the model is not fully open source. Google allows users to adapt and distribute it according to their needs, provided they comply with the license conditions, which are intended to prevent misuse.